The Top 5 Mistakes You Make Building Software And How To Avoid Them

Building good software is hard. I think most of us in the industry, whatever our specific role and primary area of experience, know this to be true.

It's hard to ship software of the right quality, free of defects. It's hard to ship software on time. It's hard to ship software within budgets and costs. It's hard to ship software which delivers the right experience for its users.

But there's something about these truths I've observed throughout my career which I want to share and explore in this post.

As a tech guy and a developer, one of the questions I'm most commonly asked in interviews is to talk about something challenging I've achieved. Sometimes it's framed directly in the language of programming: "What's the most challenging thing you've ever done or built with this language?" Other times it's more situational: "What kind of challenges did you face in a recent project and how did you overcome them?"

I think a lot of developers feel a natural temptation at this point to start speaking in our arcane technical language: describing how we built and managed a complicated, distributed architecture, perhaps, or shoehorning in something about data structures and algorithms. Showing off our credentials, as it were.

But I don't think this is honest. The truth is that technology today is pretty decent. Computers are fast, storage is cheap, and programming languages are sophisticated and high level, backed by millions of prepackaged building blocks in the form of open source components. I'm not saying there's no innovation or that we never have to be creative, but if you're working on services which operate at any scale smaller than FAANG, there are conventional, vanilla technical solutions to probably 99% of anything you will ever conceivably be trying to do.

This tells us something very important.

The overwhelming majority of challenges we face in building good software are not technological; they are organisational.

I'm not saying this because I'm a tech guy, by the way. This isn't an attempt to abdicate the responsibility and role of developers, to sit there and say "It's not me, it's you." Developers are part of the team. Team failings are our failings too.

What I am saying is that all too often, there is a failure of collaboration and communication which directly causes the challenges we face. Sometimes that failure starts at the top and flows down, sometimes it goes the other way. Sometimes it goes sideways and outside the organisation too.

Throughout my career, I've seen a few particular mistakes repeated in multiple contexts. These are the top five failures I want to explore today.

Failure of methodology

This is what I would otherwise describe as using the wrong people in the wrong places. Failure of methodology occurs when businesses take a top-down approach to building software, both in how teams are organised and in how information about the system being built flows.

The most obvious example of this mistake is adopting the tried and tested waterfall approach to building software. Waterfall, where we develop software in a linear sequence of requirements analysis, design, development and testing through to release, is tried, tested and failed. Again and again.

This is not a case where the jury is still out and the alternatives are still regarded as experimental. The science has been done, the results are in, and we know very reliably that waterfall doesn't work.

But the same failures of methodology still creep in even when a business believes it isn't doing waterfall. "Waterfall-in-agile", where team management and processes constrain attempts to embrace more agile ways of working, results in a loss of collaboration, longer release cycles, hierarchical decision making and poorly set objectives and expectations.

This is a pattern I've seen many times. You're not agile just because you have a mandatory meeting each morning you nominally refer to as a stand up. You're not working in iterative sprints just because you're measuring how many tickets you completed in a fortnight. You're not adapting to change if you have a PM prioritising and doling out specific tickets to team members like a lunch server doling out bowls of soup in a canteen. You're not collaborating if you're siloing developers from testers, from clients, from business analysts and treating all these people as members of different teams.

I understand why it happens. The general rule in business is that things which are rigidly, extensively planned upfront are predictable. You know your costs, you know your resources, you know your deadlines and you know your profit margin.

But how many software projects do you need to see fail, with outcomes that didn't match those predictions, before you ask whether you're valuing the wrong things?

The way to avoid these failings is to embrace the values of agile philosophy and cooperatively build your methodology around them. I'm not advocating that you instead rigidly stick to Scrum or some other framework; that is the other mistake businesses commonly make. In an attempt to claw back some of that control and predictability, a whole industry of rigid processes and tools has sprung up around a philosophy which specifically tells us to value individuals and interactions over processes and tools.

What I urge businesses to understand is that better software (and therefore better outcomes for the business, in terms of cost and profit) is achieved by people talking, frequently. By iterating frequently, getting feedback frequently, and being unafraid to change priorities and direction frequently. Your developers should be on the calls with clients. Whether they're external customers or stakeholders elsewhere in your organisation, clients should be considered part of the collaborating, cross-functional team.

You would never countenance a surgeon, anaesthetist and nurse carrying out an operation on a patient and refusing to talk to each other. Embrace the diversity of your people and skills and empower them to make decisions as a team.

Failure of architecture

This one is about using the wrong tools in the wrong place, whether those tools are other pieces of software, design patterns in your code, or the infrastructure keeping your product available.

We have a lot of axioms and aphorisms in software, and some of them occasionally contradict each other. But there's one in particular that has really guided me. It's the first and foremost principle I apply to anything I work on, anything I try to build: the KISS acronym, Keep It Simple, Stupid.

Less is more. I think the most obvious and egregious examples of architectural failure I've seen all fall under premature complexity. This creeps into all aspects of product development: scaling before you need to scale, refining UX before you have users to give you feedback, delivering features no one asked for.

I've seen projects start with React and Next which would have been better served by simple server-side HTML and a few lines of plain JavaScript. I've seen conversations about lambdas, Dynamo, S3 and Kubernetes to facilitate unlimited horizontal scaling for products with zero users. And I've seen perfectly good solutions overlooked simply because they're the "old fashioned" way of doing things, when the problems they would solve are, at the scale web services operate at today, very small.

More often than not, those solutions would also have been the quickest, cheapest and most effective way of delivering value through working product.

I remember someone once asking me if it was okay to have 250,000 rows in a MySQL database, as if it were still 1993 and computers would struggle to count higher than 64k. If your product doesn't have any users yet, or its users can be measured in the thousands, the answer to which database system you should use is literally any of them. Start on a SQLite database if you want; there is no wrong answer here. Make the right architectural choice to separate your application logic from your data access logic, and you can scale later as and when you actually need to.
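Here is a minimal sketch of that separation, assuming Python and its built-in `sqlite3` module. The application logic (`register`) only talks to a `UserRepository` interface, so moving from SQLite to MySQL or anything else later means writing a new repository class rather than touching the business rules. `User`, `UserRepository`, `SqliteUserRepository` and `register` are hypothetical names chosen for illustration.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class User:
    id: int
    email: str


class UserRepository(Protocol):
    """The only view of storage the application logic is allowed to see."""

    def add(self, email: str) -> User: ...

    def find_by_email(self, email: str) -> Optional[User]: ...


class SqliteUserRepository:
    """One concrete data access implementation, backed by SQLite."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users ("
            "id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
        )

    def add(self, email: str) -> User:
        cur = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.conn.commit()
        return User(id=cur.lastrowid, email=email)

    def find_by_email(self, email: str) -> Optional[User]:
        row = self.conn.execute(
            "SELECT id, email FROM users WHERE email = ?", (email,)
        ).fetchone()
        return User(*row) if row else None


def register(repo: UserRepository, email: str) -> User:
    """Application logic: no SQL here, only the repository interface."""
    existing = repo.find_by_email(email)
    if existing is not None:
        return existing  # registering the same email twice returns the same user
    return repo.add(email)


if __name__ == "__main__":
    repo = SqliteUserRepository(sqlite3.connect(":memory:"))
    print(register(repo, "ada@example.com"))
```

If the product does grow, a MySQL- or Postgres-backed class exposing the same two methods can replace `SqliteUserRepository`, and `register` stays exactly as it is.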

In a similar vein, the number of times in recent years I've seen microservices adopted by default is worrying. In the same way that frameworks like React introduce a plethora of organisational, operational and engineering complexity which must be justified before it's put in place, distributed systems introduce the problems of consistency, availability and partition tolerance. These are not easy problems to solve and you shouldn't be trying to solve them unless your requirements truly mandate it.

I would rather work on a well designed, modular monolith than a poorly designed network of microservices any day.

The way to avoid these mistakes is to remember KISS. It should be your North Star, your guiding light. The very first question I ask myself and any team about any architectural decision is "Are we making this more complicated than we need to?" Don't use a sledgehammer to crack a nut.

The other advice I have for avoiding architectural failure is to be flexible in your choice of tooling. It's often very tempting in web development, for example, to fit every project into the technology and tooling you already know. We shouldn't be afraid to say that a CMS which worked well for half a dozen projects might not be the right choice for this one, even though we know it well and know we can support it.

Choosing the wrong tools will inevitably lead you up a rising curve of development complexity and into maintenance hell as you try to fit square pegs into round holes.

A lot of people say "the right tool for the job". We either need to mean that or, if we don't, be honest about what we can do with the tools we have.

Failure of measuring success

This mistake is all about focusing on the wrong outcomes, or giving weight to the wrong ideas.

One example of this failure is vanity metrics. These are metrics which may look good out of context but aren't reflective of delivering value; they don't really contribute to success. What is or isn't a vanity metric will vary depending on context, but it might include things like reactions or engagement on social media, hits to a web page, or number of times an app has been downloaded, when we should have been looking at completed registrations, sales or user churn.
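To illustrate the difference, here is a minimal sketch using entirely made-up figures and hypothetical field names (`Period`, `completion_rate`, `churn_rate` are not from any real analytics tool). Between the two periods, downloads double, which looks great on its own. Meanwhile, the share of started registrations that are completed falls from 60% to 25%, and churn rises from 5% to 15%.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Hypothetical product figures for one reporting period."""

    downloads: int  # easy to grow, says little about value on its own
    registrations_started: int
    registrations_completed: int
    customers_at_start: int
    customers_lost: int


def completion_rate(p: Period) -> float:
    """Fraction of started registrations that were completed."""
    if p.registrations_started == 0:
        return 0.0
    return p.registrations_completed / p.registrations_started


def churn_rate(p: Period) -> float:
    """Fraction of customers at the start of the period who left during it."""
    if p.customers_at_start == 0:
        return 0.0
    return p.customers_lost / p.customers_at_start


if __name__ == "__main__":
    last_month = Period(downloads=10_000, registrations_started=2_000,
                        registrations_completed=1_200, customers_at_start=1_000,
                        customers_lost=50)
    this_month = Period(downloads=20_000, registrations_started=4_000,
                        registrations_completed=1_000, customers_at_start=1_100,
                        customers_lost=165)
    for label, p in (("last month", last_month), ("this month", this_month)):
        print(f"{label}: {p.downloads} downloads, "
              f"{completion_rate(p):.0%} of registrations completed, "
              f"{churn_rate(p):.0%} churn")
```

The vanity number went up while the numbers that reflect delivered value went the wrong way.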

Another failure of measuring success can occur in our internal processes, where we count things like tickets completed, sprint velocity, or the number of commits or merges in a release. These measure activity, not the value delivered.

Other times we focus only on short-term gains, such as whether an initial project release was delivered on time. Maybe it was, maybe it was even within budget, but if we've not paid attention in the right places, that initial release may represent only the first stage of our ongoing cost with the project. Perhaps we've released something full of bugs; perhaps we made those poor architectural choices and it's now harder, slower and more costly for us to add the next set of requested features or fix problems.

While it's important not to fall for failures of methodology, it is vital that we understand the goals and objectives of our software from the start. This isn't the same as the granular, inflexible requirements produced in waterfall. Goals should be easy to define. Think of them like the dramatic premise of a novel: if you can't sum it up in a sentence (two at a stretch), it hasn't been adequately pinned down.

The way to avoid this mistake, then, is to understand the why before the what and how. This will help you select the right metrics to tell you throughout an iterative lifecycle whether you remain on track.

Failure of adaptation

One of the core values of agile is responding to change over following a plan. The way I like to envision and explain what this value means to me is by talking about feedback.

Failure to adapt (and this is not even specific to software) occurs when we fail to listen to what people around a project are telling us. This applies both internally and externally; feedback comes from every angle. We need to listen to the feedback of fellow team members, business stakeholders and users or customers.

The ethos of adaptation is that when something isn't working for us, we change it. But this is harder than it sounds. All too often we find ourselves in situations where the pressure, or even the temptation, is to try harder to make something work when it doesn't, and maybe find some people to blame for why it didn't. Or we accept a failure, then spend more resources purporting to analyse it, only to keep our processes and ways of working exactly the same and make the same mistakes again next time.

Effective teams regularly review and change their working practices by taking learnings from each iteration of product, both in terms of feedback from the team and feedback from the customer.

Embracing feedback requires humility and a willingness to acknowledge that our initial assumptions or plans may not always align perfectly with reality. It's about recognising that every piece of feedback, whether positive or negative, is an opportunity to learn and improve.

Moreover, the ability to respond to feedback in a timely manner is essential for maintaining momentum and adaptability. This means creating a culture where feedback is actively sought out, welcomed, and acted upon. It means fostering an environment where team members feel empowered to voice their concerns or spend time on discovery work to compare approaches.

In practical terms, responding to feedback often involves making adjustments to the product backlog, reevaluating the priority of tasks or even pivoting to a different direction altogether. Don't be afraid to throw away work that isn't working. Provide regular opportunities for teams to reflect on their progress and make course corrections.

When we don't do these things, when we fear change instead of embracing it, we make the mistake of failing to adapt.

This inevitably results in missed opportunities, lost market share and diminished profitability. Moreover, in an increasingly customer-centric environment, failure to adapt to evolving user needs and preferences leads to dissatisfaction, user churn and reputational damage.

Failure of adequate testing

This is number one for me. It's the biggest mistake I see businesses make in building and shipping successful software products.

I wasn't always an advocate of test driven development. In fact, I feel very embarrassed to say this now, but early on in my career as a more junior and less experienced developer, I didn't see the point of writing automated tests at all.

After all, we're still going to test it, right? And if a user discovers a small bug here and there, we can fix it. What does it matter if our testing phase involves a human QA process, using the application in a browser? Surely they're better placed to do the job of verifying that requirements have been met than a developer, who should just be writing code and then stepping back? It's much quicker and more effective to just write code without tests, get the feature out, then fix anything QA raise as a defect.

Oh sweet summer child, how naive art thou.

One of the arguments I often see today against automated testing (or against TDD as a methodology for building well tested software) is that writing tests adds time, complexity and cost upfront to development.

People who've been successfully practicing TDD for some time will reject this notion entirely. Me, I'm sort of happy to meet you in the middle and acknowledge that where teams don't have a huge amount of embedded experience in automated testing or test-first methodology, there's at least a bit of a learning curve in approaching your projects this way. There's maybe a very small upfront cost in investing in the infrastructure of your test harness from day one.

It doesn't matter. Whatever cost there is to adopting test-first (and there may be none, depending on your team), it is utterly dwarfed by the savings and benefits you get out of it as the length and complexity of your project grows.

The proven reality is that with automated testing, the efficiency, quality and reliability (that is, accuracy to requirements) of what we produce, and how often and easily we can ship it, continually increase. You can't have an effective feedback loop, and you can't have authentic CI/CD pipelines, without tests. An accelerated feedback loop enables teams to iterate more quickly, deliver features faster and maintain a consistent pace of development without sacrificing quality.

This is good for business. Automated testing isn't costing you anything; it's actually giving you back some of that nice, fixed predictability about costs, resources and goals.
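Here is a minimal sketch of what tests as executable requirements can look like, using Python's built-in `unittest`. The delivery-charge rule, its £50 threshold and the function and test names are all hypothetical, chosen only for illustration. Amounts are held in pence so that no floating-point rounding creeps into the comparisons.

```python
import unittest

# Hypothetical business rule, with amounts in pence.
FREE_DELIVERY_THRESHOLD_PENCE = 5_000  # £50.00
FLAT_DELIVERY_CHARGE_PENCE = 499       # £4.99


def delivery_charge(order_total_pence: int) -> int:
    """Return the delivery charge in pence for an order total in pence."""
    if order_total_pence < 0:
        raise ValueError("order total cannot be negative")
    if order_total_pence >= FREE_DELIVERY_THRESHOLD_PENCE:
        return 0
    return FLAT_DELIVERY_CHARGE_PENCE


class DeliveryChargeTest(unittest.TestCase):
    """Each test pins down one requirement. Written first, they fail until
    delivery_charge behaves as required; kept afterwards, they catch regressions."""

    def test_orders_below_threshold_pay_flat_charge(self):
        self.assertEqual(delivery_charge(4_999), 499)

    def test_orders_at_threshold_ship_free(self):
        self.assertEqual(delivery_charge(5_000), 0)

    def test_negative_totals_are_rejected(self):
        with self.assertRaises(ValueError):
            delivery_charge(-1)
```

Run it with a test runner such as `python -m unittest`. Because the requirement now lives in code, every later change is checked against it automatically, in seconds rather than in a manual QA cycle.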

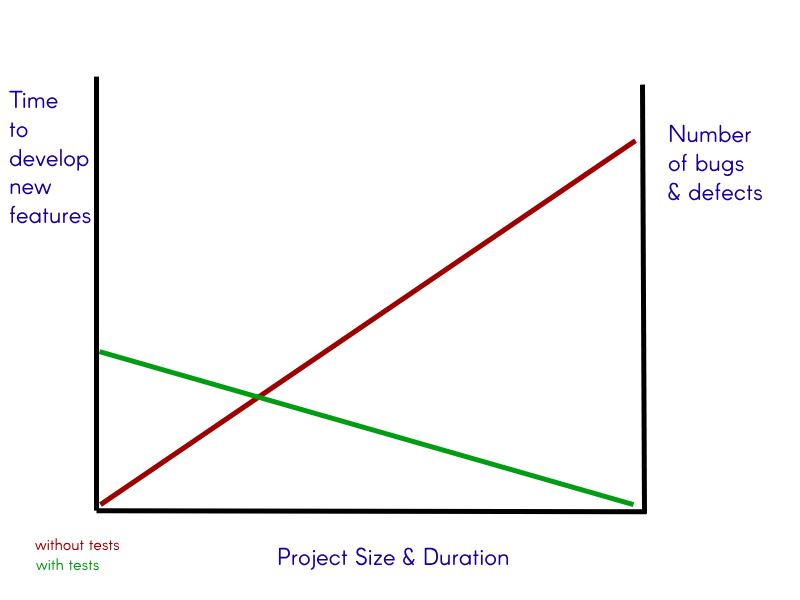

I want to end this post on a humorous but nonetheless salient point. Therefore below you will find I have mocked up a rough graph of what your project looks like with automated tests versus without them.

Crude, but this isn't a joke. The biggest mistake I've both made and encountered, countless times, in my career is failure to adequately test.

I'm not saying you must use TDD. I think you probably should and that you'll get the most value out of testing, the most flexible and adaptable architecture and the best understanding of evolving requirements if you do, but the key thing in avoiding this mistake is embedding the mindset of testing at the leadership level of your business, all the way down.

Untested software is broken software. It really is that simple.
